The Comprehensive Platform for Responsible AI in the Social Sector

We help mission-driven organizations navigate the AI age through an impact-first lens.

Meet the AI Age on Your Terms

We built a unified ecosystem to support you at every stage of maturity, from getting started with personal use to full-scale application deployment.

Learn

Demystify AI and Experiment

Don't know where to start? We translate AI into plain English and provide beginner-friendly resources curated for your day-to-day work.

Decide

Understand If AI Is for You

We aren't pro-AI. We're pro-impact. We equip you with everything you need to understand AI's limitations, so you can make an informed decision about whether and how AI fits into your mission.

Deploy

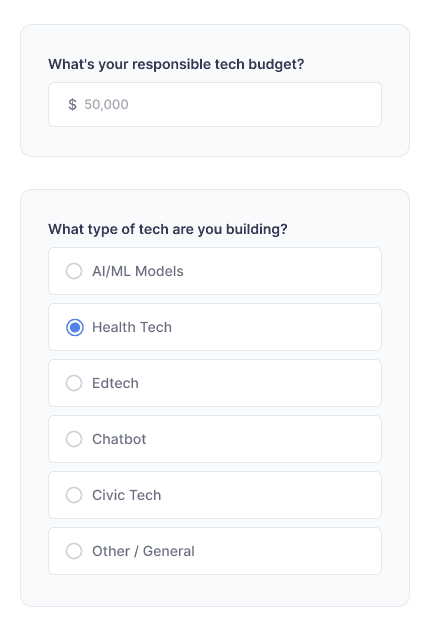

Create AI Tools for Impact

We offer tools tailored to your issue area and budget, with end-to-end guidance on creating safe, impact-first AI solutions.

Our Neutrality Charter

NO

PAY-TO-PLAY

We do not accept payment, equity, or kickbacks to promote any resource. Our recommendations are solely based on utility.

DATA SOVEREIGNTY

Our methodology is refined by aggregated user data. We do not store personal information without consent. Your IP is not sold or otherwise distributed.

Pro-Builder

Not Pro-AI

AI poses significant risks. Our ethos is centered on safely evolving alongside technology rather than advocating for its use. We address risks head-on so you know how to overcome them.